When we talk about data accessibility in terms of critical thinking, a question we often ask our clients is: Why do you think access to your critical data is important? The answer is rarely obvious. Most often, it turns out that the client doesn’t have access to critical data at all, or doesn’t have access to it in time to take action.

At this point, many solution providers jump straight to the “How” of their solution and dive into all the cool features, bells, and whistles, without fully understanding why accessing data efficiently is important to their client, much less what decision the client needs it for. Eliciting this answer reveals your client’s most important and relevant use cases, the ones around which a new approach makes sense. So let’s give it some context before we dive into the “how.”

Accessing your data efficiently is about more than just finding and manipulating data from a single source or even from heterogeneous platforms. Decision makers need to access the data to develop and deploy business strategies. Even more importantly, they need to access critical data in time to take tactical action when warranted. The ability to see your business data in real time also lets you check on the progress of a decision.

Data Virtualization is one way to get at your critical data in real time, even if it is scattered across multiple sources and platforms.

The first thing you need to know as a client is that you won’t have to reinvent the wheel or replace one cumbersome approach with another.

Connectors, connectors, connectors.

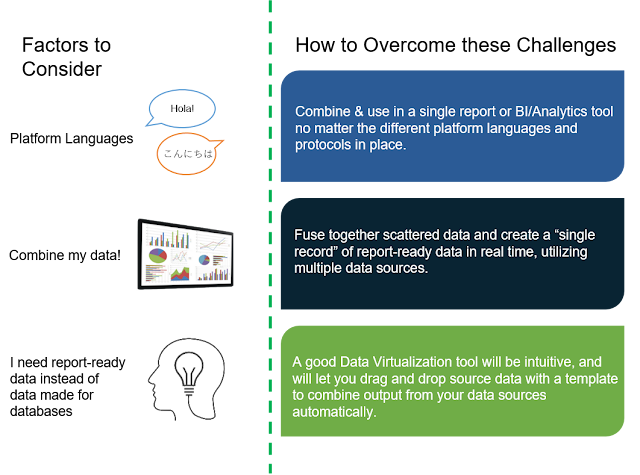

Typical elements of a useful Data Virtualization paradigm are up-to-date “Out of the Box” data connectors, platform-specific protocols, useful API protocols, API-specific coding languages, and some thought as to whether modified data will be written back to a source or to a new data set.

An example of the latter would be updating your balance in a Customer Care web application after making a payment online. In this example, the following is assumed:

- There are 5 offices across the world. They all speak different languages and have different platform protocols.

- Currently, data is scattered across various sources (e.g. cloud, Excel, and data marts).

- It takes too much time to combine the scattered data sources into one report. Transformation is a long process (with many steps) that turns raw data into end-user-ready data (report-ready for short).

With the right Data Virtualization tool, or Data Integration platform, you can consume data in real time, without waiting for IT, without making copies, and without physically moving data.
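To make the idea concrete, here is a minimal sketch of a "virtual view" that joins two scattered sources at query time instead of copying them into a report table. Everything in it is an assumption made for illustration: the `SqlConnector` and `CsvConnector` classes, the `payments_by_customer` function, the table layout, and the sample customers are hypothetical, an in-memory SQLite database stands in for a data mart, and a CSV string stands in for an Excel export. A real Data Virtualization product would supply its own connectors and query engine.

```python
import csv
import io
import sqlite3

# Hypothetical source 1: a data mart (an in-memory SQLite database stands in for it).
mart = sqlite3.connect(":memory:")
mart.execute("CREATE TABLE payments (customer_id INTEGER, amount REAL)")
mart.executemany(
    "INSERT INTO payments VALUES (?, ?)",
    [(1, 40.0), (2, 15.5), (1, 10.0)],
)

# Hypothetical source 2: a spreadsheet export (CSV text stands in for an Excel file).
CUSTOMERS_CSV = "customer_id,name,office\n1,Ada,London\n2,Kenji,Tokyo\n"


class SqlConnector:
    """Reads payment rows from the data mart each time rows() is called."""

    def __init__(self, conn):
        self.conn = conn

    def rows(self):
        cursor = self.conn.execute("SELECT customer_id, amount FROM payments")
        for customer_id, amount in cursor:
            yield {"customer_id": customer_id, "amount": amount}


class CsvConnector:
    """Parses customer rows from the CSV text each time rows() is called."""

    def __init__(self, text):
        self.text = text

    def rows(self):
        for row in csv.DictReader(io.StringIO(self.text)):
            yield {
                "customer_id": int(row["customer_id"]),
                "name": row["name"],
                "office": row["office"],
            }


def payments_by_customer(customers, payments):
    """Virtual view: joins both sources on demand and keeps no persistent copy."""
    totals = {}
    for payment in payments.rows():
        key = payment["customer_id"]
        totals[key] = totals.get(key, 0.0) + payment["amount"]
    return [
        {
            "name": c["name"],
            "office": c["office"],
            "total_paid": totals.get(c["customer_id"], 0.0),
        }
        for c in customers.rows()
    ]


customers = CsvConnector(CUSTOMERS_CSV)
payments = SqlConnector(mart)

print(payments_by_customer(customers, payments))

# A new payment lands in the source; the next query of the view reflects it
# without any reload or copy step.
mart.execute("INSERT INTO payments VALUES (2, 4.5)")
print(payments_by_customer(customers, payments))
```

The design choice worth noticing is that the view holds only the join logic, not the data. Each call goes back to the sources, so the result is as fresh as the sources themselves, at the cost of doing the work at query time.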

Data Virtualization reduces complexity, reduces spend on getting at and using your data, shortens the lag between requesting data and using it, reduces the risk that comes from moving and using stale data, and increases your efficiency as a decision maker. For a scenario like this one, it is almost certainly the path to take.

This is a guest blog post and the author is anonymous.
